房价预测和之前的题就不一样了,算是回归问题。插一句,逻辑回归不是回归模型,是分类模型,因为它出来的结果是 0 和 1 的分类。

数据

train_file_path = "train.csv"

dataset_df = pd.read_csv(train_file_path)

print("Full train dataset shape is {}".format(dataset_df.shape))

因为Id没有什么用,可以 drop 掉。

dataset_df = dataset_df.drop('Id', axis=1)

dataset_df.head(3)

分析数据

将数据分为数字型和类别型两组。

df_num = dataset_df.select_dtypes(include = ['float64', 'int64'])

df_cat = dataset_df.select_dtypes(include = ['object'])

类别型数据

空值处理

类别型数据有空值需要处理

df_cat.isnull().sum().sort_values(ascending=False)

df_cat['PoolQC'] = df_cat['PoolQC'].fillna('No Pool')

df_cat['MiscFeature'] = df_cat['MiscFeature'].fillna('No Misc')

df_cat['Alley'] = df_cat['Alley'].fillna('No Alley')

df_cat['Fence'] = df_cat['Fence'].fillna('No Fence')

df_cat['MasVnrType'] = df_cat['MasVnrType'].fillna('No Masonry')

df_cat['FireplaceQu'] = df_cat['FireplaceQu'].fillna('No Fireplace')

df_cat['FireplaceQu'] = df_cat['FireplaceQu'].fillna('No Fireplace')

for column in ('GarageCond', 'GarageType', 'GarageFinish', 'GarageQual'):

df_cat[column] = df_cat[column].fillna('No Garage')

for column in ('BsmtFinType2', 'BsmtExposure', 'BsmtQual', 'BsmtCond', 'BsmtFinType1'):

df_cat[column] = df_cat[column].fillna('No Basement')

再次查看数据,可以发现类别型的数据 unique 值都比较小,适合做独热编码。

df_cat.describe()

独热编码

df_cat = pd.get_dummies(df_cat)

数字型数据

空值处理

可以填 0,也可以填均值,看业务需求。

df_num.isnull().sum().sort_values(ascending=False)

df_num['GarageYrBlt'] = df_num['GarageYrBlt'].fillna(0)

df_num['LotFrontage'] = df_num['LotFrontage'].fillna(df_num['LotFrontage'].mean())

df_num.isnull().sum().sort_values(ascending=False)

筛选数据

为了提高模型的泛化能力,选取标签在 99%范围内的数据,这样可以排除一些异常数据。

df_data = pd.concat([df_num, df_cat], axis=1)

df_data = df_data[df_data['SalePrice'] <= np.quantile(df_data['SalePrice'], 0.99)].copy()

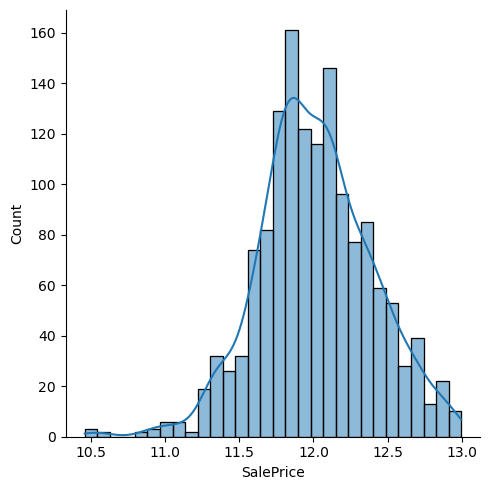

对于标签,我们做对数处理,让模型更容易捕捉到特征里面的关系。

y = df_data['SalePrice']

X = df_data.drop('SalePrice', axis=1)

y = np.log(y)

训练模型

拆分数据集

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split (X, y, test_size=0.2, random_state=20)

先用 RandomForestRegressor 试一下

from sklearn.ensemble import RandomForestRegressor

regr = RandomForestRegressor(n_estimators=300, max_depth=7, verbose=1)

regr.fit(X_train, y_train)

y_pred = regr.predict(X_test)

from sklearn.metrics import mean_absolute_error, mean_squared_error

print('mae: ', mean_absolute_error(y_test, y_pred))

print('mse: ', mean_squared_error(y_test, y_pred))

跑了一下,MSE 是 0.016705。

换一个模型,再搜索一个超参。

from xgboost import XGBRegressor

params = {'lambda': 5.08745375747522, 'alpha': 1.3840557746026854, 'colsample_bytree': 0.3, 'subsample': 0.7, 'learning_rate': 0.0705794117436359, 'max_depth': 5, 'random_state': 1, 'min_child_weight': 9}

reg = XGBRegressor(eval_metric= 'rmse', n_estimators = 1433, **params)

reg.fit(X_train, y_train,

eval_set=[(X_train, y_train), (X_test, y_test)],

verbose=True)

y_pred = reg.predict(X_test)

print('mae: ', mean_absolute_error(y_test, y_pred))

print('mse: ', mean_squared_error(y_test, y_pred))

跑了一下,MSE 是 0.01215297。

过滤不重要的特征

根据模型,可以找到不重要的特征。

importances = pd.DataFrame(columns = ['feature_importances'], data = reg.feature_importances_, index = reg.feature_names_in_).sort_values(by = 'feature_importances', ascending = False)

zero_importance= list(importances.loc[importances['feature_importances'] == 0].reset_index()['index'])

importances

将这些特征废弃掉,再次训练模型。

from xgboost import XGBRegressor

from sklearn.model_selection import train_test_split, cross_val_score

from sklearn.linear_model import LinearRegression

params = {'lambda': 5.08745375747522, 'alpha': 1.3840557746026854, 'colsample_bytree': 0.3, 'subsample': 0.7, 'learning_rate': 0.0705794117436359, 'max_depth': 5, 'random_state': 1, 'min_child_weight': 9, 'early_stopping_rounds': 50}

X = df_data.drop('SalePrice', axis = 1)

X = X.drop(zero_importance, axis = 1)

y = df_data['SalePrice']

y = np.log(y)

X_train, X_test, y_train, y_test = train_test_split(X, y, train_size = 0.3, random_state=1)

reg = XGBRegressor(eval_metric= 'rmse', n_estimators = 1433, **params)

reg.fit(X, y,

eval_set=[(X_train, y_train), (X_test, y_test)],

verbose=100)

y_pred = reg.predict(X_test)

得到新的 MSE 0.0033

mse = mean_squared_error(y_test, y_pred)

预测

对于预测集,做同样的处理,缺失的列补 0,只是最后预测结果需要np.exp处理一次。

missing_columns = [i for i in x.columns if i not in test.columns]

for i in missing_columns:

test[i] = 0

test = test[x.columns]

preds = np.exp(reg.predict(test))

output = pd.DataFrame({'Id': test['Id'], 'SalePrice': preds})